Does Defense Matter More in the Postseason?

In Pro Football Prospectus 2006 and 2007, it is shown that defensive performance (as measured by DVOA) correlates more with playoff success than offensive performance. Similar findings have been made in baseball. As correlation is not causation, the question is why offensive performance is seemingly less important. Originally, I hypothesized that defensive performance was less consistent and needed to be better overall so that the worst performances were still good enough to win. That didn't pan out particularly well, so it was back to the drawing board. Today's hypothesis is a little more straightforward.

If offensive efficiency has a higher correlation with regular season wins than defensive efficiency, it means that the offenses in the playoffs are already very good. When everybody is good, then everyone is average. If the quality of defenses isn't as good, however, then teams with good defenses will be more successful in the playoffs.

(The test data is on the 1996-2006 seasons.)

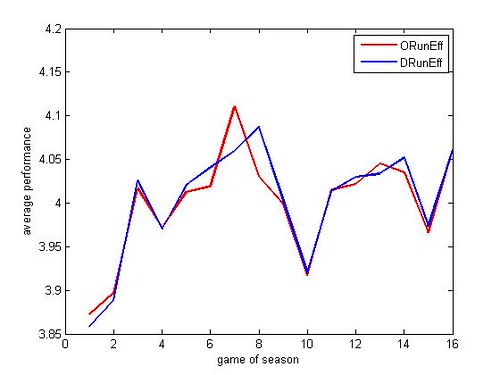

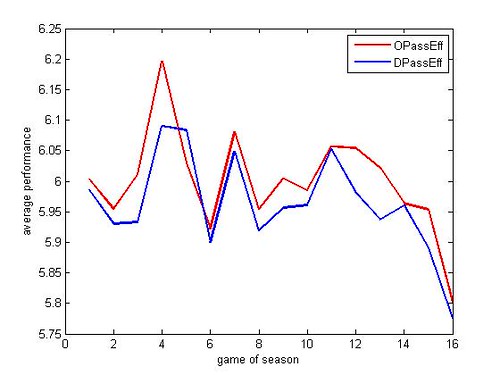

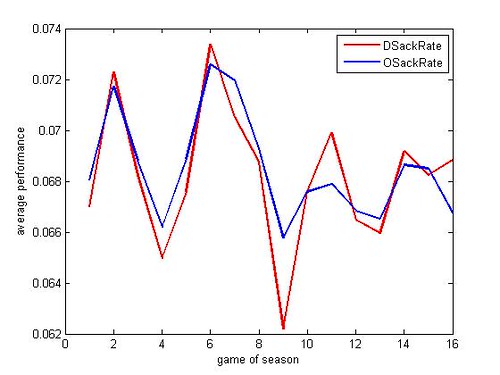

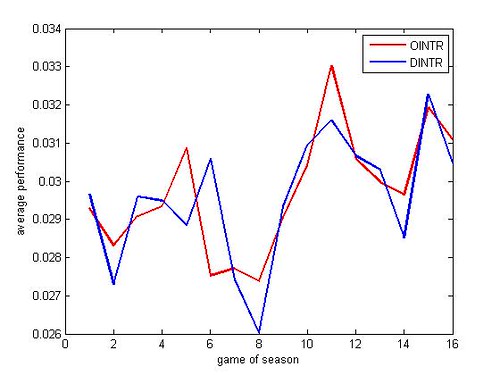

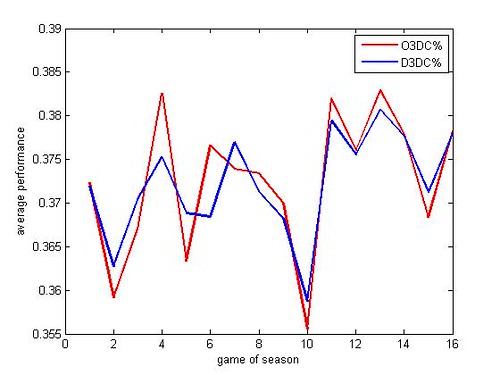

The average efficiencies (yards per play) for playoff teams are as follows:

So by VOLA standards, defenses of playoff teams aren't as good as the offenses. The one caveat is that there are 20+ more instances of Pass Off. VOLA above 10% and 20% than for Pass Def. VOLA. Take that as you will. It might just mean that there are a lot of crappy quarterbacks in the league weighing down the league averages and the very good QBs are also very consistent (Brady, Manning, Culpepper, Warner of the Rams, Green, Elway, Favre).

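Let's take a look at the correlation coefficients with playoff seedings, specifically 7 - (Seed #), for playoff teams only. Subtracting the seed from 7 means a better seed gets a higher score, so a positive correlation means better efficiency goes with better seeding. By the hypothesis, we'd expect the defensive efficiency correlations to be higher because the offenses are all very good across the seeds.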
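As a rough illustration of that calculation, here is a minimal sketch of a Pearson correlation between 7 - (Seed #) and an efficiency stat. The seeds and VOLA values below are made up for the example, not taken from the data set, and the names `pearson` and `seed_score` are my own.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def seed_score(seed):
    """Turn a playoff seed (1 = best, 6 = worst) into 7 - seed."""
    return 7 - seed


# Hypothetical one-conference example: seeds 1-6 and an invented
# Pass Def. VOLA for each team. Real input would be every playoff
# team from every season in the sample.
seeds = [1, 2, 3, 4, 5, 6]
pass_def_vola = [0.12, 0.09, 0.02, 0.05, -0.01, 0.03]

scores = [seed_score(s) for s in seeds]
r = pearson(scores, pass_def_vola)
print(f"correlation with 7 - seed: {r:.3f}")
```

A positive value here means the better seeds tend to have the better stat, which is the direction the hypothesis predicts for the defensive numbers.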

The correlation coefficients with 7-(Seed #) listed as Offense, Defense are as follows:

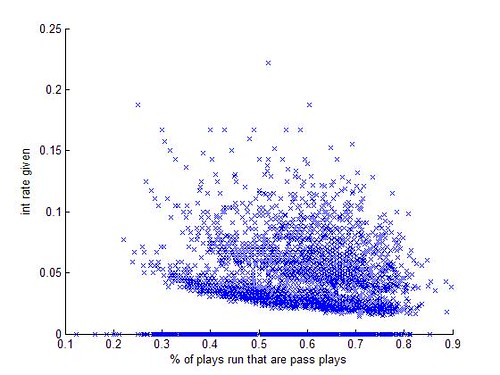

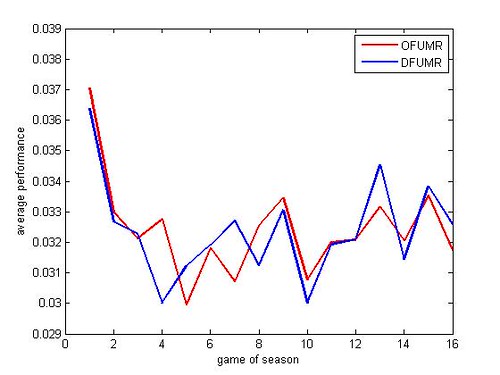

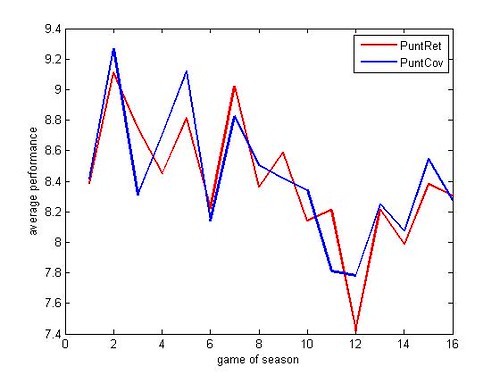

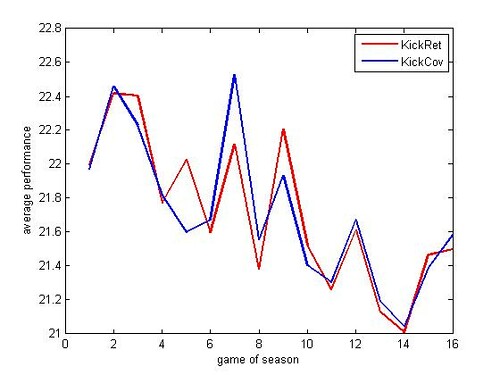

In every category except run efficiency, defensive performance has the higher correlation with playoff seeding. So defense is what sets apart the contenders from the one-and-dones. Creating turnovers is important, but an opposing quarterback who makes poor decisions plays an important part as well. Stopping drives on 3rd downs and forcing punts is also important. Interestingly, punt return averages have next to no correlation with the seeding, but kick return averages have a .18278 correlation. And although none of these correlations are particularly strong in general, they seem to be strong relative to what you'd see in working with football data. I suspect ranking divisional winners 1, 2, 3 and now 4 cuts down on these coefficients, however.

Of course, seedings do not equal success, but seedings equal home field advantage, which does seem to play a significant part in playoff success. The following table shows the value of home field advantage for the intraconference playoff games (i.e. all games except the neutral-site Super Bowl). I've split it time-wise by the realignment of divisions, which cuts down on the sample size considerably, but there does seem to be an impact.

Avg. Result/Home Win %

| Round | 1996-2001 | 2002-2006 |
| --- | --- | --- |
| WC | 9.667/79.167% | 5.0/60% |
| DIV | 11.917/79.167% | 6.25/70% |
| CF | 3.0833/50% | 3.4/60% |
| OVERALL | 8.0303/71.212% | 5.1818/63.636% |
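For anyone who wants to reproduce numbers of this kind, here is a minimal sketch of how an average result and a home win percentage can be computed from a list of final scores. It assumes that "Avg. Result" means the average home margin of victory (home points minus away points), and the four scores below are invented purely for illustration.

```python
def home_field_summary(games):
    """Return (average home margin, home win %) for (home_pts, away_pts) pairs."""
    if not games:
        raise ValueError("need at least one game")
    margins = [home_pts - away_pts for home_pts, away_pts in games]
    avg_margin = sum(margins) / len(margins)
    # Playoff games cannot end in a tie, so a positive margin is a home win.
    home_wins = sum(1 for m in margins if m > 0)
    home_win_pct = 100.0 * home_wins / len(margins)
    return avg_margin, home_win_pct


# Hypothetical scores, listed as (home points, away points).
sample_games = [(24, 17), (10, 20), (31, 28), (27, 13)]

avg_margin, home_win_pct = home_field_summary(sample_games)
print(f"avg. result: {avg_margin:+.3f}, home win %: {home_win_pct:.3f}%")
```

Running the same function separately on each round and each era would give one cell of the table per call.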

Despite the seemingly larger home field advantage, it's important to note that higher seeding likely means better team. It is the defenses, however, that are making the teams "better," according to my interpretation of the data.

Avg. VOLA for Playoff Teams, 1996-2006

| Stat | Super Bowl Winners | Super Bowl Losers | Non-Super Bowl Winners |
| --- | --- | --- | --- |
| RO | 0.022576 | 0.028555 | 0.014699 |
| RD | 0.048716 | 0.048332 | 0.007469 |
| PO | 0.10837 | 0.11239 | 0.081933 |
| PD | 0.097542 | 0.045339 | 0.042048 |
| SRM | 0.043673 | 0.028613 | 0.066265 |
| SRA | 0.08411 | 0.11689 | 0.12584 |
| PR | 0.16781 | -0.0078771 | 0.020933 |
| PC | 0.040876 | 0.010033 | 0.030548 |
| KR | 0.046957 | -0.0024219 | 0.0095025 |
| KC | -0.02826 | -0.020431 | 0.0022995 |
| 3CM | 0.12462 | 0.067547 | 0.068969 |
| 3CA | 0.034159 | 0.044792 | 0.03235 |
| PFD | 0.0064548 | -0.053755 | 0.023301 |
| PY | 0.02089 | -0.021606 | 0.018493 |
| IRG | 0.1623 | 0.11482 | 0.087693 |
| IRT | 0.27941 | 0.095794 | 0.053809 |
| FRG | 0.091916 | 0.071552 | 0.11377 |
| FRT | 0.19085 | 0.095611 | 0.054136 |

The stats where the Super Bowl winners had a noticeably higher average than the rest of the playoff teams were: Run Defense (RD), Pass Defense (PD), Punt Return (PR), Third Down Conversion Rate Made (3CM), Interception Rate Given (IRG), Interception Rate Taken (IRT), and Fumble Rate Taken (FRT). Tangential but interesting: 1996 Green Bay, 1997 Denver, 2000 Baltimore, 2001 New England, and 2005 Pittsburgh had 25%+ VOLA on punt returns, and apart from 2004 New England (-32.4%) and 1998 Denver (-2.16%), no Super Bowl winner had negative VOLA on punt returns. Back on point: defensive efficiency on run and pass plays is much higher for Super Bowl winners than for the non-Super Bowl winners (4.87% vs. 0.75% run, 9.75% vs. 4.20% pass), but the gap in offensive pass efficiency isn't as large (10.837% vs. 8.193%). The pass offenses of playoff teams are good to begin with. The defenses of playoff teams aren't necessarily as good.
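To make the size of those gaps concrete, the short sketch below subtracts the Non-Super Bowl Winners average from the Super Bowl Winners average for the four run/pass rows of the table and expresses the difference in percentage points. The figures are copied from the table; the names `avg_vola` and `gap_in_points` are just labels for this example.

```python
# Average VOLA from the table: (Super Bowl winners, non-Super Bowl winners).
avg_vola = {
    "RO": (0.022576, 0.014699),  # run offense
    "RD": (0.048716, 0.007469),  # run defense
    "PO": (0.10837, 0.081933),   # pass offense
    "PD": (0.097542, 0.042048),  # pass defense
}


def gap_in_points(winners, others):
    """Difference between two VOLA averages, in percentage points."""
    return 100.0 * (winners - others)


gaps = {stat: gap_in_points(w, o) for stat, (w, o) in avg_vola.items()}

# Print the stats from largest gap to smallest.
for stat, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    print(f"{stat}: {gap:.2f} points")
```

On both the run and the pass side, the defensive gap comes out larger than the offensive one, which is the pattern the paragraph above describes.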

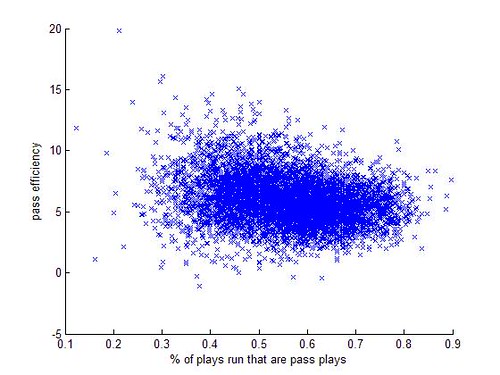

Another way to look at it is who won with high VOLA and who won with low VOLA. Of the 11 Super Bowls looked at, only the 2000-2002 winners (BAL, NE, and TB) won with below average pass offense efficiency. Only the Patriots in 2001 won with below average pass defense efficiency. Of the 22 Super Bowl teams, 12 had 10%+ VOLA in pass offense (6 winners and 6 losers). In the same time frame, 73 teams had 10%+ VOLA in pass offense, 59 of which made the playoffs. Eight Super Bowl teams had 10%+ VOLA in pass defense (4 winners and 4 losers), but only 47 teams had 10%+ VOLA in pass defense in the same time frame, 34 of which made the playoffs. So 80.822% of teams with 10%+ VOLA in pass offense made the playoffs, and 16.438% of them made the Super Bowl. On the other hand, 72.34% of teams with 10%+ VOLA in pass defense made the playoffs, while 17.021% of them made the Super Bowl. The percentages show that a good offense will get you to the playoffs, but it needs to be balanced with a good defense in order to reach the Super Bowl.
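The arithmetic behind those percentages is simple enough to check in a few lines. The counts come straight from the paragraph above; the last column (Super Bowl teams as a share of playoff teams) is not quoted in the text and is only an extra ratio of the same counts.

```python
def pct(part, whole):
    """Return part / whole as a percentage."""
    return 100.0 * part / whole


# Teams with 10%+ VOLA, 1996-2006: total, playoff teams, Super Bowl teams.
counts = {
    "pass offense": {"teams": 73, "playoffs": 59, "super_bowl": 12},
    "pass defense": {"teams": 47, "playoffs": 34, "super_bowl": 8},
}

for unit, c in counts.items():
    made_playoffs = pct(c["playoffs"], c["teams"])
    made_super_bowl = pct(c["super_bowl"], c["teams"])
    # Extra ratio, not in the text: Super Bowl teams among playoff teams.
    sb_given_playoffs = pct(c["super_bowl"], c["playoffs"])
    print(
        f"{unit}: {made_playoffs:.3f}% made the playoffs, "
        f"{made_super_bowl:.3f}% made the Super Bowl, "
        f"{sb_given_playoffs:.3f}% of its playoff teams made the Super Bowl"
    )
```

Dividing by playoff teams instead of all teams is one way to separate "getting in" from "going deep" for each group.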

That very good pass offenses are much more common than very good pass defenses is surprising and disconcerting. As a sanity check, I took a quick look at FO's 2006 DVOA standings. Eleven teams had 10%+ DVOA for pass offense, while only six teams had 10%+ DVOA for pass defense. The same is not true for rush efficiency. So at least in the passing game, very good offenses really are more common than very good defenses. The million dollar question is why they are more common. Perhaps offenses are easier to build because one man (QB or RB) can make such a large difference on offense, and one man cannot make such a difference on defense. Brady never had great receivers (nor a great running back), but his skills led the Patriots to 3 Super Bowl wins. Though you might argue that Bob Sanders had a large impact last postseason as well.

Actually, the Colts also faced imbalanced teams in the 2006 playoffs. The Chiefs had an above average offense but below average defense and a worn out running back. The Ravens had a great defense but a so-so offense (6.26% Pass Off. VOLA, -17.275% Rush Off. VOLA). The Bears had a great defense but a below average offense. The only team the Colts faced in the 2006 playoffs with some amount of balance was the New England Patriots (#7 Off DVOA, #8 Def DVOA).

In conclusion, very good offenses are more common and more important to regular season wins, so playoff teams have good offenses on average to begin with. Consequently, teams with balance on both sides of the ball are more likely to win.

Mind you, 11 seasons is a small sample size, but the initial results merit more research. In the future, I hope to extend the sample back a couple of decades at the expense of dimensionality (restricted to simple rush/pass yards per play measurements) and revisit the last decade using DVOA.